Projects

[2019a] Multiple Vehicle Detection, Tracking, and Speed Estimation in Unmanned Aerial Videos. link

This project developed a deep-learning pipeline for multiple vehicle detection and tracking from UAV videos. This approach works reliably for vehicle tracking with significant orientation and scale variations. The vehicle speed estimation method is currently under development.

[2019b] AlphaPilot AI Drone Challenge Machine Vision Test. link

The project contains two parts: a CNN that detects window corner positions for the AlphaPilot machine vision test, and a ROS perception node, “winnet”, for future closed-loop control.

[2019c] Mapping Quality Evaluation of Monocular SLAM Solutions for UAVs. link

The geometric quality of the 3D maps generated by the state-of-the-art monocular SLAM solutions (LSD-SLAM, ORB-SLAM2, and LDSO) implemented on a low-cost MAV is evaluated.

The point cloud extracted by LSD-SLAM is compared to a terrestrial laser scanned reference point cloud.

[2018a] Imitation Learning for a Self-driving Car in Unity Simulator. link

The goal of the project is to train a CNN to drive autonomously in a simulator. The project is a simulation implementation of the “End to End Learning for Self-Driving Cars” paper by NVIDIA. The CNN implemented in the project differs from the one in the paper; more details are explained here.

[2018b] Kidnapped Robot. link

In this project, a 2-dimensional particle filter was implemented in C++. The filter is given a map of landmarks, an initial location from GPS (x, y), and an initial orientation from the IMU (yaw), each with uncertainty. At each time step while the car moves, the filter also receives noisy landmark observations. The result is a real-time estimate of the vehicle pose.
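The predict, weight, and resample cycle of such a filter can be sketched as follows. This is a minimal Python illustration, not the project's C++ code: the function names, the velocity and yaw-rate inputs to the motion model, the nearest-neighbour data association, and the noise parameters are all assumptions made for the example.

```python
import math
import random


def predict(particles, velocity, yaw_rate, dt, std_xy, rng):
    """Move each (x, y, theta) particle and add Gaussian position noise."""
    moved = []
    for x, y, theta in particles:
        if abs(yaw_rate) < 1e-6:  # driving straight
            x += velocity * dt * math.cos(theta)
            y += velocity * dt * math.sin(theta)
        else:  # driving along an arc
            new_theta = theta + yaw_rate * dt
            x += velocity / yaw_rate * (math.sin(new_theta) - math.sin(theta))
            y += velocity / yaw_rate * (math.cos(theta) - math.cos(new_theta))
            theta = new_theta
        moved.append((rng.gauss(x, std_xy), rng.gauss(y, std_xy), theta))
    return moved


def weight(particle, observations, landmarks, std_obs):
    """Likelihood of vehicle-frame landmark observations for one particle."""
    px, py, theta = particle
    w = 1.0
    for ox, oy in observations:
        # Transform the observation from the vehicle frame to the map frame.
        mx = px + ox * math.cos(theta) - oy * math.sin(theta)
        my = py + ox * math.sin(theta) + oy * math.cos(theta)
        # Assumed association rule: match to the nearest map landmark.
        d2 = min((lx - mx) ** 2 + (ly - my) ** 2 for lx, ly in landmarks)
        # Isotropic 2D Gaussian measurement model.
        w *= math.exp(-d2 / (2 * std_obs ** 2)) / (2 * math.pi * std_obs ** 2)
    return w


def resample(particles, weights, rng):
    """Draw a new particle set with probability proportional to weight."""
    if sum(weights) <= 0.0:
        return list(particles)  # degenerate case: keep the current set
    return rng.choices(particles, weights=weights, k=len(particles))
```

After resampling, the pose estimate can be taken as the highest-weight particle or the mean of the particle set; which one the project uses is not stated here.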

[2018c] Vehicle State Estimation from LiDAR and Radar Measurements. link

In this project, we used an Extended Kalman Filter to estimate the state of a moving car from noisy LiDAR and Radar measurements in a simulator. A Kalman Filter that processes only the laser measurements was also implemented here.

[2018d] Vehicle State Estimation from LiDAR and Radar Measurements. link

In this project, we used an Unscented Kalman Filter to estimate the state of a moving car from noisy LiDAR and Radar measurements in a simulator.

- Kalman Filter (KF) / Extended KF / Unscented KF
  - Physical model
    - Linear: KF
    - Non-linear: EKF / UKF
  - Linearization approximation
    - One point using Taylor expansion: EKF
    - Multiple points using the sigma-point method: UKF
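The difference between the two linearization approaches in the list above can be illustrated on a scalar example. The sketch below propagates a Gaussian through a non-linear function in both ways; the function names and the choice of the spreading parameter `lam = 3 - n` are assumptions for illustration and are not taken from the project code.

```python
import math


def ekf_propagate(f, df, mean, var):
    """EKF style: first-order Taylor expansion around a single point (the mean)."""
    return f(mean), df(mean) ** 2 * var


def ukf_propagate(f, mean, var, lam=2.0):
    """UKF style: push sigma points through f and recombine them (scalar case, n = 1)."""
    n = 1
    spread = math.sqrt((n + lam) * var)
    points = [mean, mean + spread, mean - spread]
    weights = [lam / (n + lam), 0.5 / (n + lam), 0.5 / (n + lam)]
    ys = [f(p) for p in points]
    y_mean = sum(w * y for w, y in zip(weights, ys))
    y_var = sum(w * (y - y_mean) ** 2 for w, y in zip(weights, ys))
    return y_mean, y_var


# Example: y = x^2 with x ~ N(0, 1). The exact result is mean 1, variance 2.
square = lambda x: x * x
d_square = lambda x: 2.0 * x
ekf_mean, ekf_var = ekf_propagate(square, d_square, 0.0, 1.0)
ukf_mean, ukf_var = ukf_propagate(square, 0.0, 1.0)
```

In this example the single-point expansion sees a zero slope at the mean and reports zero mean and zero variance, while the sigma points recover the exact moments. Real filters apply the same idea to the vector-valued process and measurement models.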

[2018e] Vehicle steering and throttle PID Control. link

Two PID controllers, one for steering and one for throttle, are implemented in C++ to maneuver the vehicle around tracks in a simulator. The input is the cross-track error (CTE) measured in the simulator; the outputs are the steering and throttle commands.
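A PID controller of this kind can be sketched in a few lines. The version below is a Python illustration rather than the project's C++ implementation; the class name, the sign convention, and the `clamp` helper are assumptions, and no gains from the project are reproduced.

```python
class PID:
    """Textbook PID controller acting on an error signal such as the CTE."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        """Return the control command for the current error sample."""
        self.integral += error * dt
        # No derivative term on the first sample, since there is no history yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        # Negative sign: the command acts against the error.
        return -(self.kp * error + self.ki * self.integral + self.kd * derivative)


def clamp(value, low=-1.0, high=1.0):
    """Keep a command inside the range the actuator accepts (assumed [-1, 1])."""
    return max(low, min(high, value))
```

A steering command would then be `clamp(steer_pid.update(cte, dt))`. How the throttle controller maps the CTE to a throttle command is not described above, so it is left out of the sketch.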

[2018f] Vehicle MPC Control. link

A model predictive controller (MPC) that drives the vehicle around tracks in a simulator is developed. The cost function consists of components to minimize the cross-track error, the heading error, the velocity error, the steering and throttle effort, and the change between consecutive steering and throttle values.
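The cost terms listed above can be written as a weighted sum of squares over the prediction horizon. The following Python sketch shows one possible form; the function name, argument names, quadratic penalties, and default weights are assumptions for illustration and are not the tuned values from the project.

```python
def mpc_cost(cte, epsi, v, steer, throttle, v_ref, weights=None):
    """Sum the MPC cost over a horizon.

    cte, epsi, v: predicted cross-track error, heading error, and speed per step.
    steer, throttle: candidate actuator commands per step.
    """
    w = {"cte": 1.0, "epsi": 1.0, "v": 1.0,
         "steer": 1.0, "throttle": 1.0,
         "d_steer": 1.0, "d_throttle": 1.0}
    if weights:
        w.update(weights)

    cost = 0.0
    # Tracking terms: stay on the reference path at the reference speed.
    for c, e, speed in zip(cte, epsi, v):
        cost += w["cte"] * c ** 2 + w["epsi"] * e ** 2 + w["v"] * (speed - v_ref) ** 2
    # Effort terms: avoid large actuator commands.
    for s, t in zip(steer, throttle):
        cost += w["steer"] * s ** 2 + w["throttle"] * t ** 2
    # Smoothness terms: penalize jumps between consecutive commands.
    for k in range(1, len(steer)):
        cost += w["d_steer"] * (steer[k] - steer[k - 1]) ** 2
        cost += w["d_throttle"] * (throttle[k] - throttle[k - 1]) ** 2
    return cost
```

In a full controller, an optimizer would minimize this cost over the actuator sequences subject to the vehicle model, and only the first command would be applied at each step.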

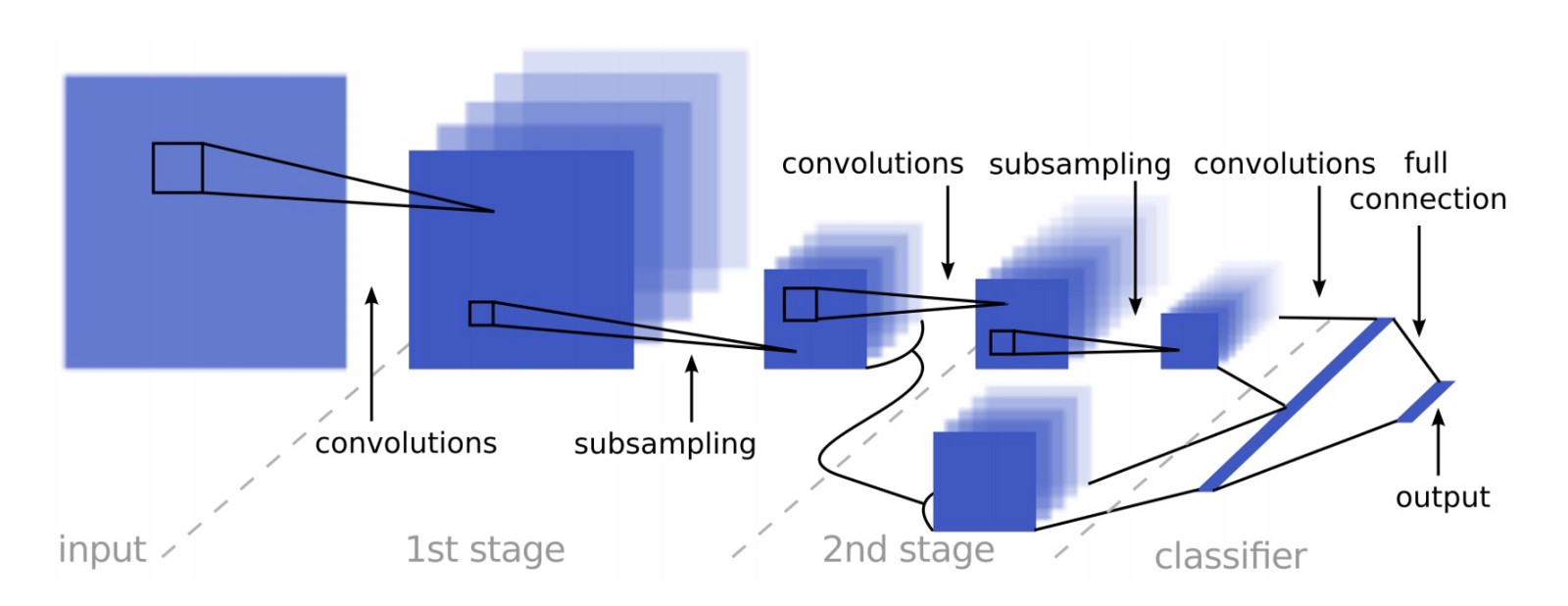

[2017a] A CNN Traffic Sign Classifier. link

In this project, a convolutional neural network, an improved LeNet, is trained to classify traffic signs.

[2017b] Lane Lines Detection. link

In this project, a lane detector takes a video as input and displays the lane boundaries in the image space. The project comprises two parts: image pre-processing, and lane detection and display. The image pre-processing includes camera calibration, distortion correction, color and gradient binary thresholding, and perspective transform; the second part contains lane line detection and visual display.

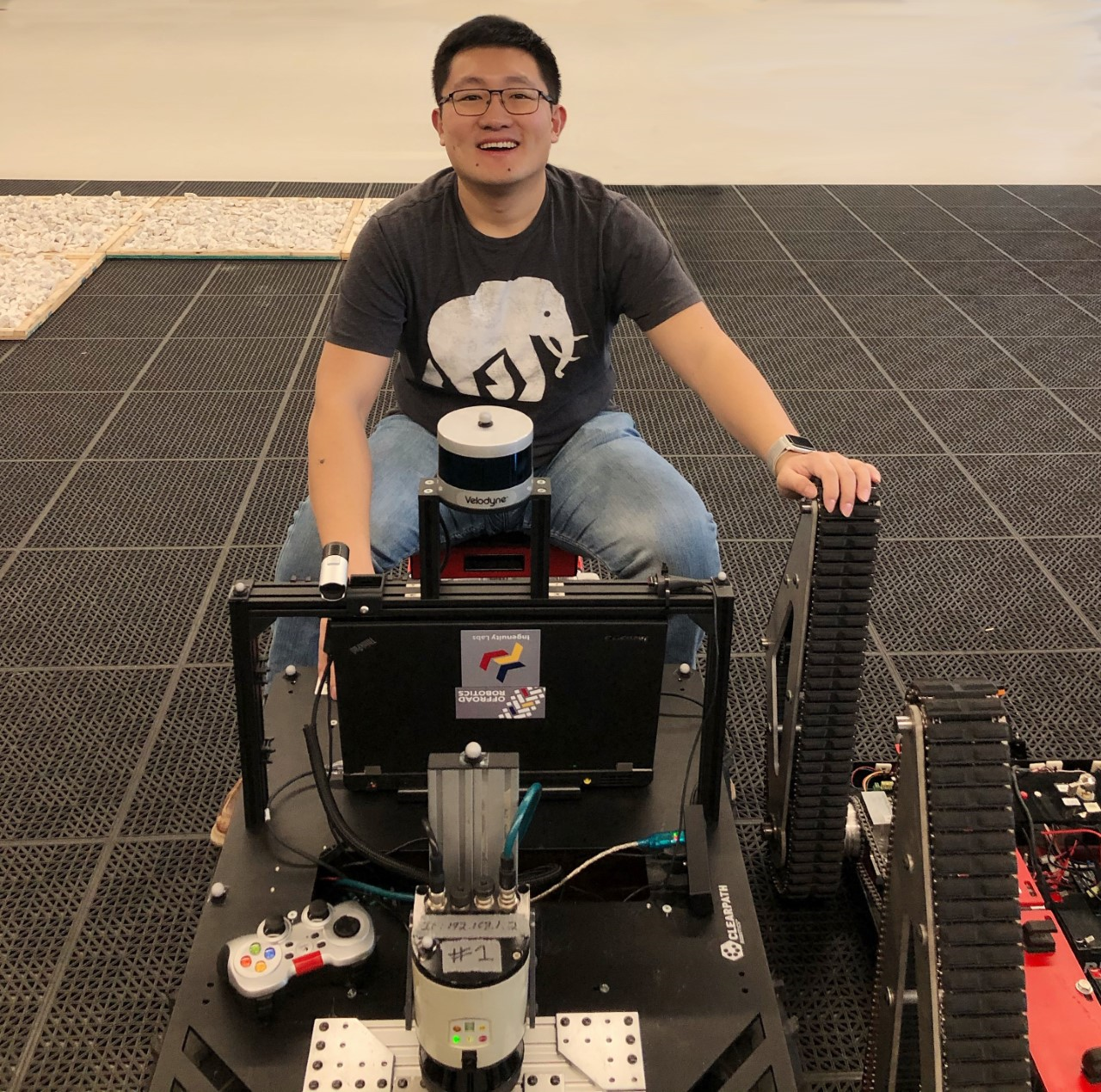

[2011 - 2017] PhD Project: Autonomous Locomotion Mode Transition of a Track-legged Quadruped Robot Step Negotiation. link

This work proposed a method for autonomous locomotion mode transition of a track-legged quadruped robot during step negotiation. The autonomy of the decision-making process was realized by a proposed criterion for comparing the energy performance of the rolling and walking locomotion modes. Two climbing gaits were proposed to achieve smooth step negotiation behaviours for the energy evaluation. Simulations showed that autonomous locomotion mode transitions were realized when negotiating steps of different heights. The proposed method is generic enough to be applied to other hybrid robots after some preliminary studies of the energy performance of their locomotion modes.

Full Body Step Negotiation Gait:

Locomotion Mode Transition: